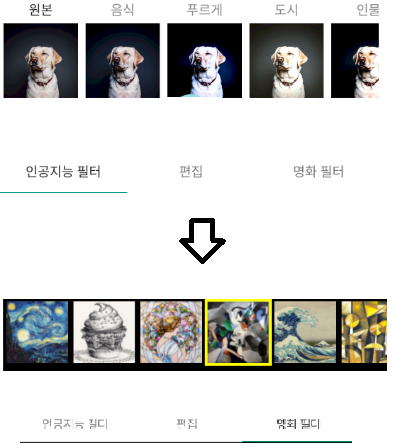

Hello, I'm building a photo filter app, and I'd like to combine two open-source projects into one program.

For the AI filter toolbar, I found source code that uses a library to apply the filters: https://www.androidhive.info/2017/11/android-building-image-filters-like-instagram/

https://github.com/ravi8x/AndroidPhotoFilters

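For the famous-painting filter, I want to add painting styles generated with TensorFlow, but I have no idea where to start. (The picture shown in the painting filter in the screenshot is a composite I made.) https://github.com/cruzsoma/Fast_Style_Transfer_App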

Posts are limited to 8000 bytes, so I had to delete a lot of the code.

This one does not seem to use a library. Please help.

package cruzsoma.ai.cnn.fast_style_transfer_app;

public class CameraActivity extends AppCompatActivity {

TensorFlowInferenceInterface tensorFlowInferenceInterface;

private static final int INPUT_IMAGE_SIZE = 256;

private static final int INPUT_IMAGE_SIZE_WIDTH = 256;

private static final int INPUT_IMAGE_SIZE_HEIGHT = 256;

private static final String INPUT_NAME = "input";

private static final String OUTPUT_NAME = "output_new";

private int[] imageIntValues;

private float[] imageFloatValues;

private int[] resIds = {R.drawable.starry, R.drawable.ink, R.drawable.mosaic, R.drawable.udnie, R.drawable.wave, R.drawable.cubist, R.drawable.feathers};

private String[] modelNameList = {"starry", "ink", "mosaic", "udnie", "wave", "cubist", "feathers"};

// This seems to list the filters in an array

protected ArrayList<Model> initModelsConfig() {

ArrayList<Model> models = new ArrayList<>();

for (int i = 0; i < modelNameList.length; i++) {

Model model = new Model();

model.type = i;

model.iconRes = resIds[i];

model.modelName = modelNameList[i];

models.add(model);

}

return models;

}

imageIntValues = new int[INPUT_IMAGE_SIZE_WIDTH * INPUT_IMAGE_SIZE_HEIGHT];

imageFloatValues = new float[INPUT_IMAGE_SIZE_WIDTH * INPUT_IMAGE_SIZE_HEIGHT * 3];

ArrayList<Model> modelsConfig = initModelsConfig();

StyleButtonAdapter styleButtonAdapter = new StyleButtonAdapter(CameraActivity.this, modelsConfig);

recyclerView.setAdapter(styleButtonAdapter);

cameraKitView.onStart();

facingButton.setOnTouchListener(facingButtonTouchListener);

flashButton.setOnTouchListener(flashButtonTouchListener);

captureButton.setOnTouchListener(captureButtonTouchListener);

styleSplitorLayout.setOnTouchListener(styleSplitorTouchListener);

styleSplitButton.setOnTouchListener(styleSpiltButtonTouchListener);

refreshButton.setOnTouchListener(refreshButtonTouchListener);

styleButtonAdapter.buttonSetOnclick(new StyleButtonAdapter.ButtonInterface() {

@Override

public void onclick(View view, Model model) {

Toast toast = Toast.makeText(CameraActivity.this, "You picked this filter: " + model.modelName, Toast.LENGTH_SHORT);

toast.setGravity(Gravity.BOTTOM, 0, 350);

toast.show();

selectedModel = model.modelName;

}

});

}

private void cropPictureLeft() {

float x = styleSplitorLayout.getX() + styleSplitor.getX();

float rate = x / imageOverlayLayout.getWidth();

int imageWidth = captureImageBitMap.getWidth();

int imageHeight = captureImageBitMap.getHeight();

int width = (int) (imageWidth * rate);

if (width <= 0) {

pictureLeft.setLayoutParams(new RelativeLayout.LayoutParams(0, pictureLeft.getHeight()));

} else {

Bitmap bitmap = Bitmap.createBitmap(captureImageBitMap, 0, 0, width, imageHeight);

pictureLeft.setLayoutParams(new RelativeLayout.LayoutParams((int) x, pictureLeft.getHeight()));

pictureLeft.setImageBitmap(bitmap);

}

}

private View.OnTouchListener styleSplitorTouchListener = new View.OnTouchListener() {

@Override

public boolean onTouch(View view, MotionEvent motionEvent) {

RelativeLayout.LayoutParams params = (RelativeLayout.LayoutParams) view.getLayoutParams();

switch (motionEvent.getAction()) {

case MotionEvent.ACTION_MOVE:

params.leftMargin = params.leftMargin + (int) motionEvent.getX();

view.setLayoutParams(params);

cropPictureLeft();

break;

case MotionEvent.ACTION_UP:

params.leftMargin = params.leftMargin + (int) motionEvent.getX();

view.setLayoutParams(params);

break;

}

return true;

}

};

void changeViewImageResource(final ImageView imageView, @DrawableRes final int resId) {

imageView.setRotation(0);

imageView.animate()

.rotationBy(360)

.setDuration(400)

.setInterpolator(new OvershootInterpolator())

.start();

imageView.postDelayed(new Runnable() {

@Override

public void run() {

imageView.setImageResource(resId);

}

}, 120);

}

boolean handleViewTouchFeedback(View view, MotionEvent motionEvent) {

switch (motionEvent.getAction()) {

case MotionEvent.ACTION_DOWN: {

touchDownAnimation(view);

return true;

}

case MotionEvent.ACTION_UP: {

touchUpAnimation(view);

return true;

}

default: {

return true;

}

}

}

private Bitmap scaleBitmap(Bitmap input, int width, int height) {

int inputWidth = input.getWidth();

int inputHeight = input.getHeight();

Matrix matrix = new Matrix();

matrix.postScale(((float) width) / inputWidth, ((float) height) / inputHeight);

Bitmap scaledBitmap = Bitmap.createBitmap(input, 0, 0, inputWidth, inputHeight, matrix, false);

// if (!input.isRecycled()) {

// input.recycle();

// }

return scaledBitmap;

}

private Bitmap imageStyleTransfer(Bitmap bitmap) {

Bitmap scaledBitmap = scaleBitmap(bitmap, INPUT_IMAGE_SIZE_WIDTH, INPUT_IMAGE_SIZE_HEIGHT);

scaledBitmap.getPixels(imageIntValues, 0, scaledBitmap.getWidth(), 0, 0, scaledBitmap.getWidth(), scaledBitmap.getHeight());

for (int i = 0; i < imageIntValues.length; ++i) {

final int val = imageIntValues[i];

imageFloatValues[i * 3 + 0] = ((val >> 16) & 0xFF) * 1.0f;

imageFloatValues[i * 3 + 1] = ((val >> 8) & 0xFF) * 1.0f;

imageFloatValues[i * 3 + 2] = (val & 0xFF) * 1.0f;

}

Trace.beginSection("feed");

tensorFlowInferenceInterface.feed(INPUT_NAME, imageFloatValues, INPUT_IMAGE_SIZE_WIDTH, INPUT_IMAGE_SIZE_HEIGHT, 3);

Trace.endSection();

Trace.beginSection("run");

tensorFlowInferenceInterface.run(new String[]{OUTPUT_NAME});

Trace.endSection();

Trace.beginSection("fetch");

tensorFlowInferenceInterface.fetch(OUTPUT_NAME, imageFloatValues);

Trace.endSection();

for (int i = 0; i < imageIntValues.length; ++i) {

imageIntValues[i] =

0xFF000000

| (((int) (imageFloatValues[i * 3 + 0])) << 16)

| (((int) (imageFloatValues[i * 3 + 1])) << 8)

| ((int) (imageFloatValues[i * 3 + 2]));

}

scaledBitmap.setPixels(imageIntValues, 0, scaledBitmap.getWidth(), 0, 0, scaledBitmap.getWidth(), scaledBitmap.getHeight());

return scaledBitmap;

}

}
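
The pixel conversion inside `imageStyleTransfer` does not depend on Android or TensorFlow, so it can be pulled out and checked on its own before wiring the model into the filter toolbar. Below is a minimal sketch of that step in plain Java. The class name `PixelCodec` and its method names are hypothetical and do not come from either project. The clamping to 0..255 is also an assumption: the post does not say what range the model output stays in, and without clamping a negative or too-large value would spill into the neighbouring color channels when shifted.

```java
// Hypothetical helper, not part of Fast_Style_Transfer_App or AndroidPhotoFilters.
final class PixelCodec {
    private PixelCodec() {}

    /** Unpacks ARGB ints into interleaved R, G, B floats (0..255), as the loop before feed() does. */
    static float[] argbToRgbFloats(int[] argb) {
        float[] rgb = new float[argb.length * 3];
        for (int i = 0; i < argb.length; i++) {
            int val = argb[i];
            rgb[i * 3]     = (val >> 16) & 0xFF; // red
            rgb[i * 3 + 1] = (val >> 8) & 0xFF;  // green
            rgb[i * 3 + 2] = val & 0xFF;         // blue
        }
        return rgb;
    }

    /** Truncates to int like the original cast, then limits the result to 0..255 (assumed safeguard). */
    private static int clampToByte(float v) {
        int truncated = (int) v; // NaN becomes 0, infinities saturate to int limits
        return Math.max(0, Math.min(255, truncated));
    }

    /** Packs interleaved R, G, B floats back into opaque ARGB ints, as the loop after fetch() does. */
    static int[] rgbFloatsToArgb(float[] rgb) {
        int[] argb = new int[rgb.length / 3];
        for (int i = 0; i < argb.length; i++) {
            argb[i] = 0xFF000000
                    | (clampToByte(rgb[i * 3]) << 16)
                    | (clampToByte(rgb[i * 3 + 1]) << 8)
                    | clampToByte(rgb[i * 3 + 2]);
        }
        return argb;
    }

    public static void main(String[] args) {
        float[] floats = argbToRgbFloats(new int[]{0xFF102030});
        floats[0] = 300f; // pretend the model overshot on red
        floats[1] = -5f;  // and undershot on green
        int[] out = rgbFloatsToArgb(floats);
        System.out.println(Integer.toHexString(out[0])); // prints "ffff0030"
    }
}
```

If something like this were used, the two loops in `imageStyleTransfer` could call these methods on `imageIntValues` and `imageFloatValues`, leaving only the `feed`, `run`, and `fetch` calls tied to TensorFlow.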